Invisible Editors: Impact of AI on Media Content Quality and Trust

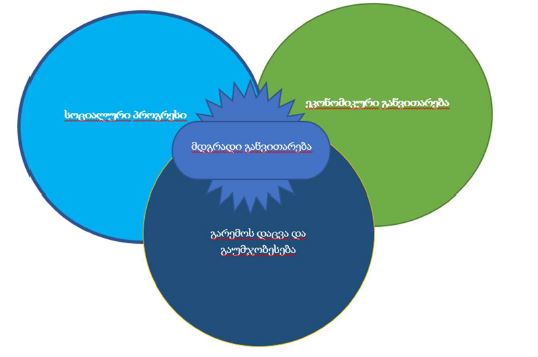

AI technologies such as machine learning, natural language processing, and automated journalism are rapidly transforming how media content is produced, distributed, and consumed. These tools promise greater efficiency through automated news writing and personalized content recommendations, and they enable real-time delivery of information. At the same time, their integration into media workflows has intensified concerns about the circulation of biased, low-quality, or irrelevant information and of disinformation. Because AI systems learn from existing data, they can reproduce and even amplify the biases embedded in that data, while accelerating the spread of disinformation and undermining trust in media institutions.

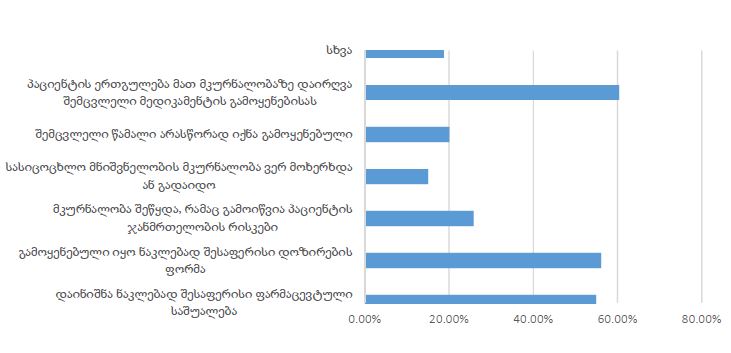

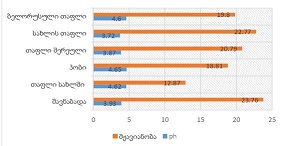

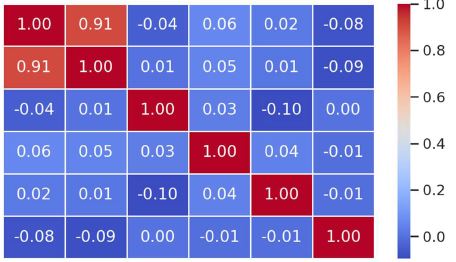

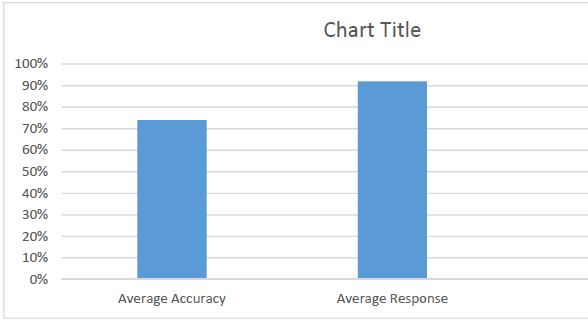

This study examines how AI becomes an invisible gatekeeper that contributes to biased and irrelevant media content, and it explores the consequences for public trust and democratic discourse. Using a mixed-methods design that combines content analysis of AI-generated and AI-curated media, an online survey, and secondary data, the research shows that media practitioners and audiences recognize both the transformative potential of AI and its ethical risks. A strong majority of survey participants perceive AI’s impact on media as significant or very significant and associate AI algorithms with the spread of biased or low-quality information across news and social platforms. Respondents also express substantial concern about the opacity and limited accountability of AI systems in shaping what information people see.

The findings point to an urgent need for strategies that reduce AI-induced bias and improve information quality, such as enhancing algorithmic transparency, diversifying training data, and developing clear regulatory and ethical frameworks. Drawing on media and communication theories, this article offers a critical analysis of AI’s role in contemporary media and outlines pathways for more responsible and accountable use of AI in the information ecosystem.

Copyright (c) 2025 Georgian Scientists

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.